After extension, I need to take it out and put it on the ice box, then open the lid to add EDTA. Then I put the membrane back and seal it, stir and mix, and spin down. This process takes another 30 s or so.

In total, the error in the time measurement is ~1 min, not including inaccuracy in the temperature measurement. If you look at the time course curves, the data points at <1 min are always very messy. That's because the time I'm measuring is smaller than the error. If we further decrease the time interval, we will only get messier data.

Please also be advised that a smaller time interval means more time points, more labor, longer time and higher reagent cost.

RC: Yes, I'm aware of the difficulty of higher rate sampling and I noticed the issues with those data points. However, you should not assume that 5 s sampling is the only improvement that can be made to the protocol (or that 5 s is the recommended value, since I may not have accurately recalled the precise number recommended). You should discuss this to determine what the implications of the simulations are for the experiments, since the usability of the data relies on certain assumptions being satisfied, and it is better to know earlier what these implications and assumptions are. The simulations will be part of the paper, so a consistent story must be generated. It is also possible that the latest conclusions from the simulations have changed since I last received an update, and the issue with accurate initial rate estimation is less severe than I was initially told. I am conveying this information now so you do not find later that you did a lot of work that has to be repeated.

RC (2/19): Some of the recent simulations suggest that initial rate measurements may be advisable at 5 second intervals during the initial stage of the reaction (i.e. sampling more finely, if possible, at that stage, to get a better initial rate estimate). Since this may affect the experiments you are running/just ran, you can get more information about this from Karthik, who is available to connect again on the extension paper issues that you and he were discussing. Since both of you may have other work underway you can arrange a mutually convenient time to share info; just bear in mind it may affect your experiments.

Raj

CJ (2/10/14)

For Taq polymerase, NEB recommends 0.5–2.0 units per 50 µl reaction, ideally 1.25 units/50uL. The Specific Activity of Taq Polymerase = 292000 units/mg, which means 1 unit = 0.036 pmol. So 1.25U/50uL = 0.9nM.
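
The unit-to-molarity conversion above can be checked with a short script. This is only a sketch: the Taq molecular weight of 94 kDa is an assumption that is not stated in the post (it is the value that reproduces 1 unit = 0.036 pmol), and the function names are illustrative.

```python
# Assumed molecular weight of Taq polymerase (not given in the post).
TAQ_MW_G_PER_MOL = 94_000.0
# Specific activity quoted in the post.
SPECIFIC_ACTIVITY_U_PER_MG = 292_000.0


def pmol_per_unit(specific_activity_u_per_mg=SPECIFIC_ACTIVITY_U_PER_MG,
                  mw_g_per_mol=TAQ_MW_G_PER_MOL):
    """Amount of enzyme (pmol) in one activity unit."""
    grams_per_unit = (1.0 / specific_activity_u_per_mg) * 1e-3  # mg -> g
    mol_per_unit = grams_per_unit / mw_g_per_mol
    return mol_per_unit * 1e12  # mol -> pmol


def enzyme_conc_nM(units, volume_uL):
    """Enzyme concentration (nM) for a given number of units in a reaction volume."""
    pmol = units * pmol_per_unit()
    # pmol per uL equals uM; multiply by 1000 to get nM.
    return pmol / volume_uL * 1000.0


one_unit_pmol = pmol_per_unit()          # about 0.036 pmol
typical_nM = enzyme_conc_nM(1.25, 50.0)  # about 0.9 nM

# Recommended range from the post: 0.5 to 2.0 units per 50 uL.
low_nM = enzyme_conc_nM(0.5, 50.0)
high_nM = enzyme_conc_nM(2.0, 50.0)
print(f"1 U = {one_unit_pmol:.3f} pmol; 1.25 U/50 uL = {typical_nM:.2f} nM")
print(f"0.5-2.0 U/50 uL = {low_nM:.2f}-{high_nM:.2f} nM")
```

If a different molecular weight is preferred, only `TAQ_MW_G_PER_MOL` needs to change.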

RC (2/6): Please provide the typical enzyme concentration in PCR in nM units to Karthik.

CJ 1/27/2014

Attached please find a 'step-by-step procedure' for building a user-defined equation using Prism.

Some notes:

- I did not encounter any bug or problem. So no debugging information can be provided.

- There is no guarantee that given any equation and data, I can work out the curve fitting within 1-2h. The time needed varies from case to case.

- I'm following a built-in equation, so I know how to set up the initial values and constraints. With a new equation, we can start with the same settings. But I will need to fine-tune the initial values and constraints before getting a satisfactory fit. This part of the work MUST BE DONE WITH APPROPRIATE DATA. Without data, it is not possible for me to predict what initial values to use for each parameter in each different equation.

CJ 1/16/2014

About the processivity data: as mentioned in Raj's recent manuscript:

Literature data on Taq processivity at 72 C: E[ioff]=22 (reference: Wang et al., A novel strategy to engineering DNA polymerases for enhanced processivity and improved performance. Nucl. Acids Res. 32: 1197-1207).

The corresponding microscopic processivity for Taq is 0.95.

The experimental conditions for this result are: 10mM Tris-HCl pH8.8, 2.5mM MgCl2, 50nM template, 0.005-0.1nM Taq, 250uM dNTP.

They did not vary [N], so it is hard to tell whether processivity depends on [N] or not.

Do we need more processivity data from the literature? If so, are we only interested in Taq, or also in other polymerases? Knowing this would save us time on literature searching.

RC (1/16): We're mostly interested in Taq, but we'd like to know whether there is any info regarding the effect of reaction conditions on processivity, since that would reveal something about the dissociation mechanism (as indicated in the notes). If you have already concluded that there is no information about this, or that processivity assays are always carried out under standardized conditions on an enzyme-specific basis, please let us know. In any case, the above info will be useful to Karthik in determining k-1 using processivity data.

Regarding Raj's question: RC: I believe you had a good linear fit for kcat/Kn(T)?

CJ (1/16/14): I never did linear fit. Before we toss in Sudha's old data, I did nonlinear MM fitting to get kcat and Kn. Those curves are well fitted. Then we added Sudha's data and did two variable nonlinear fit. For the reason I explained before, this fitting did not work well.

RC: I meant the Arrhenius fitting shown in the paper for the temperature dependence, with regard to the question on whether we need to do new experiments varying [N] at 72C in addition to the ones already done at 70C. In the context of the two-variable fitting, you can consider this and let me know what you decide.

CJ(1/16/14): I see. So before we added Sudha's data the Arrhenius plot worked well. After that, it is hard to say because I did not get any reliable parameters. And there are only 4 temperature points (instead of the 6 used before) that are common to Sudha's and my data. I'm not sure whether the Arrhenius plot coming from single-variable fitting can be useful in two-variable fitting.
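
For reference, the Arrhenius fitting discussed here amounts to a straight-line fit of ln(k) against 1/T. The sketch below shows that fit on synthetic data only; the activation energy, prefactor and rate values are hypothetical and are not results from our experiments, and `arrhenius_fit` is an illustrative name.

```python
import math

R_GAS = 8.314  # gas constant, J/(mol K)


def arrhenius_fit(temps_c, rate_constants):
    """Least-squares fit of ln(k) = ln(A) - Ea/(R*T).

    Returns (Ea in J/mol, prefactor A in the units of k).
    """
    xs = [1.0 / (t + 273.15) for t in temps_c]   # 1/T in 1/K
    ys = [math.log(k) for k in rate_constants]   # ln(k)
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return -slope * R_GAS, math.exp(intercept)


# Synthetic example: six temperatures, hypothetical Ea and A.
temps_c = [50, 55, 60, 65, 70, 75]
true_ea = 60_000.0   # J/mol, hypothetical
true_a = 1.0e10      # hypothetical prefactor
ks = [true_a * math.exp(-true_ea / (R_GAS * (t + 273.15))) for t in temps_c]

ea_fit, a_fit = arrhenius_fit(temps_c, ks)
print(f"Ea = {ea_fit / 1000:.1f} kJ/mol, A = {a_fit:.3g}")
```

With only 4 common temperature points the same fit still runs, but the slope is determined by fewer points and is correspondingly less certain.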

CJ 1/15/2014

I've read through Raj's posting on 1/13/14 including the attached file. I generally understand what we need to do in the next step. I will collect literature record on processivity and corresponding conditions and prepare a report hopefully by the end of this week. Meanwhile I have a couple of questions regarding the file Raj uploaded on 1/13/14.

(1) On page 1, it says 'Plot p_off(i) vs i; log p is slope. ...' I think it should be log(p_off(i)) that is to be plotted vs i.

RC: Yes.
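
A quick numerical check of point (1): if the termination distribution is geometric, p_off(i) = (1 - p) p^i, then log(p_off(i)) is linear in i with slope log(p). The geometric form is an assumption made for this sketch, and p = 0.95 is used only as an illustrative value.

```python
import math


def fit_log_linear(indices, p_off):
    """Least-squares line through (i, log p_off(i)); returns (slope, intercept)."""
    ys = [math.log(v) for v in p_off]
    n = len(indices)
    x_mean = sum(indices) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in indices)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(indices, ys))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


# Assumed geometric distribution with an illustrative processivity value.
p_true = 0.95
idx = list(range(1, 41))
p_off = [(1.0 - p_true) * p_true ** i for i in idx]

slope, intercept = fit_log_linear(idx, p_off)
p_est = math.exp(slope)  # the slope of log(p_off) vs i is log(p)
print(f"slope = {slope:.5f}, recovered p = {p_est:.4f}")
```

Plotting p_off(i) itself against i would give a decaying curve rather than a line, which is why the log transform is needed before reading p off the slope.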

(2) On page 4, second paragraph from top, it says 'We consider two CT systems that can produce the observed distribution...' What is a CT system?

RC: continuous time system - ie one framed in terms of reaction rates - as opposed to discrete system that was studied in previous treatments of processivity (e.g. by von Hippel). These systems allow us to get relative or absolute rate constants based on processivity data.

(3) On page 7, equation (1), I'm not quite sure what is (E.Di+1)'. People usually use ' symbol to indicate a closed conformation of polymerase-template-dNTP complex. After nucleotide incorporation, this complex will become polymerase-template-PPi. Then there will be a conformational change to come back to open state before ejecting PPi. After PPi leaves, the complex would remain in open conformation and therefore is usually indicated as (E.Di+1) without the prime symbol. Based on this mechanism, there should be no such species as (E.Di+1)'.

RC: I used this symbol before receiving the papers which used it to denote the closed conformation. I used it to refer to the intermediate state after nucleotide addition and prior to translocation. In these simplified models I did not include PPi dissociation. As noted the model with translocation may need to be modified based on the latest literature information, in particular the relative magnitudes of the various rate constants. The model I used was based on a reading of von Hippel's description of translocation.

(4) On page 7 at the bottom, there's a redundant paragraph. You may want to delete it.

(5) On page 15 at the bottom, you say Keq,1 should be replaced with an expression with k1, k-1, kcat, Kn etc. k-1 can be derived from k1 and Keq,1; I understand this. But how do we get k1? Do we use a literature value? Or is it an additional parameter that needs to be determined by curve fitting?

RC: It is determined by curve fitting using time series data of the type you have obtained, as I believe may have been discussed in the parameter estimation section. This applies in the absence of processivity data. In the presence of processivity data, the MM fitting should give all unknown parameters.

(6) You ask me and Karthik to meet to discuss the curve fitting using different models. As I have explained, our current data set does not work well with any model. In that case, what should we discuss during the meeting?

RC: The strategy for going forward and subdivision of labor should be discussed. You should tell him about your literature review on mechanistic models. You should also tell him about the conditions under which processivity is usually measured, as noted below. Also, Karthik can use processivity data to predict the enzyme dissociation rate constant under model 1, and hence combine this info with Keq(T) from Datta to get the association rate constant as well. Based on these he can simulate the whole system. He can test the validity of MM steady state assumptions with this model as well.

(7) For additional experiments, do we want to do them at 72C (this is where we have processivity data) or 70C (we already have data with fixed template concentration for this temperature, but not for 72C)?

RC: I believe you had a good linear fit for kcat/Kn(T)?

CJ (1/16/14): I never did linear fit. Before we toss in Sudha's old data, I did nonlinear MM fitting to get kcat and Kn. Those curves are well fitted. Then we added Sudha's data and did two variable nonlinear fit. For the reason I explained before, this fitting did not work well.

CJ 1/14/2014

In the attached spreadsheet is a list of DNA polymerase kinetic parameters I found in the literature. Five of the six references are also attached; one reference is missing because we do not have access to the full text. The rate constants k1 - k8 are explained in the generic model pasted on the right side of the list. The models in the six references are mostly the same. None of them considers translocation as a separate step. Experimentally, the rate constant for translocation is combined with k6 and k-6.

kinetic parameter in literature.xls

Zahurancik 2013.pdf

I also found a couple of recent papers discussing the translocation as a separate step. All of them used single-molecule technology and their models are substantially different from the one discussed above so I did not include their results in the spreadsheet.

Lieberman 2013.pdf

Wang 2013.pdf

Maxwell 2013.pdf

The Wang 2013 paper may be of particular interest because they observed that during translocation there are two states, one weakly bound and one strongly bound. This finding may be related to the salt-dependent and salt-independent states in von Hippel's review.

RC (1-14): The bottom left para on pg 3881 on Wang is similar to some of the proposed approaches to parameter estimation made in the document I uploaded below, where we propose using equilibrium data (t=infty) to get processivity parameters and then use time series data (other t) like that you have obtained so far to estimate other parameters. Please pass this info to Karthik.

It will still be useful to know if there are some universal conditions under which processivity is measured, vis-a-vis the comments in the notes below on the different dependence of processivity on reaction conditions predicted by different models.

I will read Raj's latest post in detail later today and tomorrow. For now, there is just one thing I want to bring to our attention: with the current data from Sudha and me, the bireactant curve fitting will not work well no matter what model or software we use. The problem is that the two sets of data do not agree well with each other because of differences in experimental conditions. Below is the xls file I sent to Raj last week. If you open it and turn to sheet '65C Prism', look at the table in blue. Going across row 53, the initial rate increases with [SP], so in cell J53 we expect a number >146. Going down column J, the initial rate also increases with [N], so in cell J53 we expect a number between 62 and 72. These two expectations cannot both hold, which shows that the two groups of results cannot be integrated, although I have made every possible adjustment to compensate for the differences in experimental conditions.

bireactant model.xls

This is a common problem with the data at all 4 temperature points. It cannot be solved by changing the model or manipulating the data. If we really want to use the bireactant model to get good results, I'm afraid we will need to repeat Sudha's part with the new protocol.

RC (1/14): Ok, this could be done at one temperature to start, after the discussion with Karthik on the priorities for next steps.

RC 1/13/14

polymerase kinetic models and parameter estimation results.docx

Attached is a polymerase modeling and parameter fitting summary that shows how to get rate constants from processivity data, under different assumptions. I have asked Karthik to consider simulating the simplest model (no translocation) and comparing the results to your experimental time series data. In the meantime, it will be helpful to get data on rate constants for the various steps in the full models presented in the literature in order to determine the validity of the assumptions in the various models we are considering in these notes. Also, we would like to get more information on the conditions under which processivity is determined, because according to the different models considered, processivity will depend on different parameters, as discussed in the notes. Hence, understanding from the literature what factors processivity depends on will help us choose a model.

It may be necessary for you to work together with Karthik to modify the models until you find the minimal model that fits your data. After you have provided the requested information, I may ask you to start working together on this.

Here are some general comments:

1) The assumptions are used to justify various possible simplifications of the full reaction scheme for polymerase extension, for example due to certain steps being much faster than others or the steady state assumption for the nucleotide addition step being invoked. Even so, none of the models presented accounts for all the steps in extension. Recent work on polymerase mechanisms uses around 6 reversible steps in the full reaction scheme.

2) For PCR applications, we care most about the ability to predict the extension time at any given temperature. Any aspect of the model that does not affect this time significantly can be ignored.

Also, the following experimental methods and estimation techniques are practical to apply in rapid thermostable polymerase characterization for PCR modeling:

a) steady state kinetics (initial rates)

b) processivity

c) parameter estimation using time series data

Of these, we would only consider doing a) and c) ourselves.

It is not very practical to do the (pre-steady state and burst) kinetics experiments that are done by labs that specialize in polymerases when characterizing various thermostable polymerases at different temperatures for PCR applications.

3) The simplest model is the one you also considered without translocation. There, we can use the method described in the notes to get the dissociation rate constant at 72C based on processivity data. KM will do this first. He can get the binding rate constant as well using Datta and Licata's Keq(T). The MM kinetics for this model shows that Keq(T) for polymerase binding is one Km that can be obtained from fitting the MM data. For this model it will help to know the conditions under which processivity is measured. For example, does processivity depend on [N]? The simplest model in the notes indicates that it does depend on [N].

4) If model 3) does not predict the data well, it may be due to the various assumptions made. More complicated models can be considered. One of these is the model with translocation. Here, as mentioned in the notes, there is some ambiguity as to whether polymerase dissociation can occur during translocation. The model I presented assumes that it can, and that translocation occurs much faster than nucleotide addition. In this case, processivity does not depend on [N]. Also, the dissociation rate constant is not the same one that would follow from Datta and Licata's experiments, since their experiments did not consider translocation. As shown, the Km's from the MM model have different interpretations in this model. This model could also be tested against the data. Other, more complicated models can be considered based on the same principles presented.

Note that the model I presented with translocation was based in part on von Hippel's commentary on salt dependence of processivity. Based on the latest literature, this may not be correct. But this model introduces some principles regarding how assumptions that certain steps in the reaction mechanism are much faster than others can lead to model simplification. This is related to the issue of the relative magnitudes of the rate constants in the full extension models presented in the literature.

6) One concern I have with some of the simple models is the assumption that steady-state kinetics applies throughout the reaction for nucleotide addition. This may be a source of errors in the models. That assumption could be relaxed in the extension paper, if the estimation methods suggested are used.

*7) If the extension paper is to focus on building and testing predictive models for extension time calculations, it will be necessary to consider more than one model and numerically implement several estimation schemes. These estimation schemes would involve MM model fitting, as well as the use of time series data for parameter estimation, as discussed in the notes. The models would need to be simulated and the predictions compared to experimental time series data until a suitably accurate minimal model is found. It would be necessary for two team members to work together on these issues. Some of the underlying principles have been provided in the notes.

I may post a full version of the notes above including more details on how the results were obtained shortly. These details are not required for the proposed work at this time.

Raj

CJ 1/10/14

Regarding your comments on 1/6/14:

1. I'm not sure what you were referring to by 'some of the literature (eg., Benkovic, Johnson)'. Would you please attach the two papers you mentioned? I found Kenneth A. Johnson's 1993 review on DNA polymerase conformational coupling. However, I did not see him mentioning 'dissociation is assumed to occur prior to nucleotide addition'.

RC: I was referring to the assumption that enzyme dissociation occurs as the reverse reaction of enzyme binding to the primer template complex in the first step of the polymerase reaction scheme, but is not considered to occur during translocation.

2. There are some serious mistakes in Peter H. von Hippel's review 'on the processivity of polymerases'. In equation (5) he says that nT is as defined before. But it should not be the nT defined before; instead, nT in equation (5) should be the total density of all bands. The paper by Yan Wang in NAR 2004 cited von Hippel's review but corrected this mistake. Also, on page 129 of von Hippel's review, in the last paragraph of the main text, he says that 'Polymerases of different types may react differently to template "obstructions" that are characterized by high PI values'. This statement seems to be wrong: obstructions on the template should be characterized by low PI values. Given these critical problems, I would be very cautious about any conclusion in this paper.

3. Von Hippel's review also cites a lot of unpublished results (especially for the salt-dependency studies) from his own group, making it very difficult to verify those conclusions. Considering both 2 and 3, I'm not sure how reliable this review is.

4. Below I'm posting three original papers published in 2013. A lot has happened since von Hippel's 1994 review. Hopefully these new papers will bring some inspiration.

ja403640b.pdf

bi400803v.pdf

ja311603r.pdf

Regarding your comments today:

1. I will look into the different rate constants of different DNA polymerases. One thing to clarify: are we only interested in the polymerases commonly used in PCR? There are also a lot of studies on human or viral DNA polymerases that are not used for PCR. Do we also want to include them?

RC: If data for PCR polymerase is available it's fine, but since the only data that was presented to me in the past regarding enzyme binding and dissociation rates were for non-PCR polymerases, I didn't know whether PCR polymerases were as exhaustively characterized kinetically.

2. In Prism it is doable to input user-defined equation. I tried it a little bit but found it takes some time to debug. But if we really need to deal with it, I can try more.

RC: It will be important to determine if this is possible and if so how difficult, in the near future, so we can decide whether to use this software or not.

CJ: We can use this software.

CJ (12/27/2013)

Attached below is the reference you are looking for. I will also read it.

j.1749-6632.1994.tb52803.x.pdf

About the processivity assay: it is not hard, but it really relies on a DNA sequencer, or CE with a fluorescence detector. Basically, they label primers with fluorescent dyes and run the extension. Then the extended products are loaded onto a DNA sequencer. A DNA sequencer for Sanger sequencing basically runs capillary electrophoresis and then detects products based on their fluorescence. In this way they can determine the concentration distribution of extension products at different lengths (the concentration is measured by fluorescence). Compared to a PAGE gel, CE is more accurate for quantification and more sensitive (which means a lower detection limit, and it can also distinguish two strands that differ by a single nucleotide).

RC (1-6): I would like you to look into an issue regarding the mechanism of polymerase dissociation from the primer-template complex. There appear to be some ambiguities in the literature regarding when the polymerase dissociates. In some of the literature (e.g., Benkovic, Johnson) including some references cited in the above paper, dissociation is assumed to occur prior to nucleotide addition. In the above review, it is stated that polymerase dissociation that is responsible for processivity is highly dependent on salt concentration, since electrostatic interactions are primarily responsible for maintaining template binding during the translocation step of polymerization that occurs after nucleotide addition, when the polymerase moves to the next position on the template. This seems to imply that most of the dissociation occurs during the translocation step. However, dissociation during translocation does not appear to be addressed at all in the earlier literature. Please investigate whether any other literature has mentioned dissociation during the translocation step. Also, please check the standard conditions (concentrations) under which processivity is measured. For example, are measurements always made under saturating nucleotide concentrations? And at a specific salt concentration? Please also make a list of all known rate constants (including translocation rates) and processivities of a few well-studied polymerases and post to the wiki. At this time, please investigate these questions only as we have various other modeling efforts underway that address other issues. I will provide a detailed document summarizing the modeling efforts shortly.

t7 polymerase mechanism.pdf

RC (1-10): I have prepared a summary of the modified MM theory that accommodates various possible kinetic schemes - including translocation/dissociation during translocation and omitting a treatment of translocation. There is also a discussion of how available processivity data can be used in our fitting. I will be posting that by Mon. The rate constants for the various steps for a couple of polymerases will be helpful in choosing among the kinetic schemes. Hence in this document I will not be specifying one particular scheme to use, but rather presenting various options.

It will also be helpful to know whether entering an arbitrary MM multivariable model equation into Prism is very difficult.

RC(12/16): Please read the methods section of the attached paper and comment on how difficult it would be to do this processivity assay in our lab. Also, please look up and post reference 27 (von Hippel).

processivity.pdf

CJ 12/19/13

Here I'm attaching 7 xls files. The first one is a summary of fitted dsDNA concentration at all temperatures, all [N]0, and all time points. Note that [E]0 and [N]0 vary from one temperature to another, as indicated in each sheet.

121913 fitted curve summary.xls

Below are 6 xls files of the fitted concentration vs. experimental data, as well as the information of the curve fitting, and fitted data at more time points, each file for one temperature.

The file for 75C is slightly different from what I posted last time. I corrected some errors made during copy and paste.

121913 55C fitted curve.xls

121913 50C fitted curve.xls

121813 70C fitted curve.xls

121813 65C fitted curve.xls

121813 60C fitted curve.xls

121713 75C fitted curve.xls

CJ 12/18/13

Attached below are two files with XY values of fitted time course for all dNTP concentrations at 75C. The pdf file is a summary of fitted values compared to experimental values at 12 experimental time points. The spread sheet includes full results at 1000 time points between 0-10min. The xls file also includes curve fitting parameters and raw data in terms of RFU versus minutes. In the pdf file the units are converted to nM versus seconds using calibration curve.

121713 75C fitted curve.pdf

121713 75C fitted curve.xls

I will work on other temperatures in the following two days.

CJ (12/17)

Sorry for the missing attachment. I'm attaching the spread sheet here.

Keq for Raj.xls

CJ (12/16):

Just want to clarify on three things:

(1) By saying 'Concentration values from fitted curve ...', you are referring to concentration of what? Total dsDNA? Anything else?

RC: ds nucleotide since that is what is being measured.

(2) You want this concentration values for all temperatures, all dNTP concentrations, and all time points? That means 6*12*12 = 864 numbers in 72 curves. If so, please allow me some time (2-3 days) to finish them.

RC: All times for the highest few temperatures (e.g. 65,70,75) for 1000uM would be needed. This can wait for 1-2 days.

(3) By saying 'Keq for enzyme binding', are you referring to dissociation constant of enzyme with template (Keq,1)? or enzyme with dNTP (Kn)? The former is taken from Datta and LiCata paper and I have sent them to you on Friday by email. The latter is reported in the manuscript.

In case you did not get my email on Friday, I'm attaching the Datta and LiCata paper here:

Thermodynamics of the binding of Thermus.pdf

RC: Keq,1. The email from Fri said "Attached is a spread sheet with Datta and Licata's Kep data. " but there was no attachment.

You mentioned you extrapolated some values.

Currently I'm working on the sequencing results of the beta-lactamase library. I will come up with a report by tomorrow. After that is done, and after all the points here are clarified, I will come back to the paper.

RC (12/16):

Chaoran,

Please provide the following, the first three of which I need for some calculations I am doing:

- Concentration values from fitted curve at each time point for all curves in your ppts. You can repost all your ppts on the wiki with these values indicated.

- Any info on the fitting provided by prism.

- Keq for enzyme binding at each temperature

CJ 12/11/2013

Regarding Raj's comments:

RC:Is the time series data with fitting at a particular temperature available in the manuscript for 1000uM? If not please post it here. Please also tell me the concentration of nucleotide at the last time point at a particular temperature (say 72 C).

CJ: Please see a report in the attached slides.

121113 for Raj.ppt

(I updated the attachment.)

RC: I mean whether the aforementioned rate measurement can be made in real time during a real-time PCR reaction. I would assume this is difficult. As you can see from the equations presented, it is not just a matter of total dNTP incorporation after each cycle.

CJ: I agree with you.

RC: If we don't include the simulation we will need to move some of the MM derivations into the body. This will be the next step after the issues above are settled. After these changes the paper should be put in journal format (probably NAR), even if we choose to later put in simulation content. What are your thoughts on content vis-a-vis NAR standards if the simulation content is removed? I will also consider this.

CJ: I see. For NAR, I feel that we'd better add some simulation work to match its high profile (IF 8.28).

CJ 12/03/2013

Attached below are four files:

(1) a near-final manuscript with tracked changes.

Taq Paper CJ 11272013.doc

(2) a draft of the Supporting Information. I temporarily moved the simulation work into the SI.

Taq Paper SI CJ 120213.doc

(3) my updated comments.

CJ comments 11272013.doc

(4) a sample paper on NAR.

Thermodynamics of the binding of Thermus.pdf

RC: I am copying the remaining two questions here:

8) Can accurate fluorescence measurements be made under pseudo first order conditions (higher [N] excess)? If these conditions cannot be used, we must use numerical simulations to make the predictions.

RC: The ratio of [N] to [SP]_0 is relevant. In early stages of PCR, the template concentration is lower, I think, than that used in our experiments. With higher template concentration (lower ratio), nucleotide depletes more quickly. I am curious about the scope for changing this ratio from an experimental signal-to-noise standpoint. The ratio is low enough in the current experiment that the reaction cannot be considered pseudo-first order in the E.Di, since [N] clearly changes (see below) during the time over which measurements are made. The measurements in the current experiments also appear to be made sufficiently early such that the \sum_{i=0}^{n-1} [E.Di] remains roughly constant, as evidenced by the first order kinetics in [N], whereas we are interested in measurements at later times when a lot of full-length DNA is being formed.

CJ: I’m still not quite clear on what you want me to provide here. Again, I don’t see any reason why high [N] would make the measurement inaccurate. I have never observed a deterioration in accuracy at high [N] (up to 1000uM) in experiments, either.

RC: So you believe that [N]_0 can be raised sufficiently high that [N](t) is approximately = [N]_0 even when most of the [SP]_0 has been converted to DNA, without compromising the measurements. If so, you should provide the maximum [N]_0 that you feel would be viable, and then indicate the [N] when all [SP]_0 has been converted to DNA. Based on this I can assess the accuracy of the pseudo-first order approximation and hence the suitability of the simulation method to the experimental setup.

CJ 12/11/13: The highest [N]0 in our experiment is 1000uM. The [N] consumed in the extension is 0.2uM x 63 = 12.6uM (0.2uM is [SP]0; 63 is the length of the ssDNA in the SP complex). So by the end of the reaction only 1.3% of the [N] is consumed. Under such conditions I did not observe any deterioration in data quality.
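
The depletion estimate above is simple enough to script, which makes it easy to re-evaluate for other [N]0 or [SP]0 values. A minimal sketch using only the numbers from the post; the function names are illustrative.

```python
def nucleotide_consumed_uM(sp0_uM, ssdna_length_nt):
    """dNTP consumed (uM) when every SP complex is fully extended."""
    return sp0_uM * ssdna_length_nt


def fraction_consumed(n0_uM, sp0_uM, ssdna_length_nt):
    """Fraction of the initial dNTP pool consumed at complete extension."""
    return nucleotide_consumed_uM(sp0_uM, ssdna_length_nt) / n0_uM


SP0_UM = 0.2        # [SP]0 from the post
SSDNA_LENGTH = 63   # length of the ssDNA in the SP complex, from the post
N0_UM = 1000.0      # highest [N]0 used in the experiments

consumed = nucleotide_consumed_uM(SP0_UM, SSDNA_LENGTH)
frac = fraction_consumed(N0_UM, SP0_UM, SSDNA_LENGTH)
n_final = N0_UM - consumed
print(f"consumed = {consumed:.1f} uM ({frac:.1%}); final [N] = {n_final:.1f} uM")
```

The final [N] of about 987.4 uM is the quantity needed to judge how well [N](t) = [N]_0 holds at complete extension.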

RC: Ok, this could be a suitable pseudo-first order condition, though we may increase [N] further beyond this to improve the approximation. I assume a 5-10x increase in [N]_0 would be ok. Is the time series data with fitting at a particular temperature available in the manuscript for 1000uM? If not please post it here. Please also tell me the concentration of nucleotide at the last time point at a particular temperature (say 72 C).

9) A method for determining concentration of fully extended DNA at any time based on solution phase fluorescence measurements is proposed. It is based on a rate measurement. Are these measurements inaccurate without a sufficiently large number of measurements to obtain the slope? Would the required reaction conditions decrease signal to noise due to background fluorescence? Would the signal to noise during PCR cycles be too low to use this method to obtain the final DNA product concentration? If not, we may portray this as another application of our experimental work, since it could be used in PCR without model simulations.

RC: The method is described on pg 26 under comment RC23 (where this comment is taken from). It is based on rate measurement under conditions of sufficiently high nucleotide excess that d[N]/dt is approximately 0, and where the sum of intermediate concentrations is not constant, unlike the current experimental protocol, which displays first order kinetics with respect to [N]. The protocol would otherwise be analogous to the rate measurements in the current experiments.

CJ: I am still not quite clear on exactly what the ‘method for determining concentration of fully extended DNA at any time’ is. Do you mean the gel-based assay? Without running a real experiment, it is hard for me to predict whether the S/N of a gel-based assay would be good enough to monitor the progression of PCR or not.

RC: As described using equations in the manuscript, the method is to measure the rate of nucleotide incorporation. Since this rate is proportional to the sum of all [E.Di] concentrations, i<n, it allows us to determine \sum_{i=0}^{n-1} [E.Di] at any time and hence by mass balance calculate DNA concentration [E.Dn], assuming we know kcat/Kn. This is a solution measurement of rate like the ones done for this paper, but we may want to do them under the pseudo-first order conditions mentioned above. Hence we need to know whether accurate rate measurements can be made under the conditions determined under 8).
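The calculation described above can be sketched as follows. This is only an illustration under stated assumptions, not the manuscript's derivation: it assumes the incorporation rate has the form v = (kcat/Kn) [N] \sum_{i<n} [E.Di], and that the mass balance is [SP]_0 = \sum_{i<n} [E.Di] + [E.Dn] (i.e., all template is enzyme-bound). The numerical values and the function name `full_length_dna` are hypothetical.

```python
def full_length_dna(rate_uM_per_s, n_uM, kcat_over_kn, sp0_uM):
    """Estimate [E.Dn] (uM) from a measured nucleotide incorporation rate.

    Assumed rate law:     v = (kcat/Kn) * [N] * sum_{i<n} [E.Di]
    Assumed mass balance: [SP]0 = sum_{i<n} [E.Di] + [E.Dn]
    kcat_over_kn is in uM^-1 s^-1; concentrations are in uM.
    """
    sum_intermediates = rate_uM_per_s / (kcat_over_kn * n_uM)
    return sp0_uM - sum_intermediates


# Hypothetical inputs, chosen only to show the arithmetic.
kcat_over_kn = 0.001   # uM^-1 s^-1 (placeholder, not a measured value)
n_uM = 1000.0          # [N], taken as constant under pseudo-first order conditions
sp0_uM = 0.2           # [SP]0
rate = 0.1             # measured incorporation rate at the chosen time, uM/s

e_dn = full_length_dna(rate, n_uM, kcat_over_kn, sp0_uM)
print(f"estimated [E.Dn] = {e_dn:.3f} uM")
```

Because the estimate divides the measured rate by kcat/Kn, any error in the rate or in kcat/Kn propagates directly into the computed [E.Dn], which is why the accuracy of the rate measurement at an arbitrary time matters here.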

How long would it take to do these experiments? I am also asking you to comment on whether these measurements can be made under standard PCR reaction conditions.

CJ 12/11/13: Our current protocol is quite labor-intensive. It takes two days to finish one set of experiments, which means measuring dNTP incorporation at 12 different time points, for a fixed extension temperature. Various reactant concentrations ([SP]0, [E]0, [N]0 etc.) can be assayed in one set of experiments. But there's a limit to the number of samples I can handle simultaneously. Currently I'm running 8 different [N]0 points in one batch, which is about the maximum. Would you specify how many time points, concentration points, and temperature points you would like to obtain, so that I can estimate the time needed?

RC: We would need only one [N]_0 and one temperature. The number of time points could in principle be smaller, since we do not need to fit a curve, but since we will be running fewer reactions we may in fact increase the number of time points. We may make some measurements at later times. Assuming for the time being that the number of time points is the same, how long would the experiment take?

The data could be used in two ways: a) comparison of absolute [N] at a particular time to the model prediction; b) comparison of the [dsDNA] obtained from the rate measurement method above (not an initial rate measurement, but rate at the specified time) to the model prediction. In the latter case it will be necessary to obtain an accurate rate estimate at -any- specified time during the reaction.

I'm not quite sure about the term 'standard PCR reaction conditions' you used above. If you mean the enzyme, template, dNTP concentration etc., our current assay is quite comparable to standard PCR reaction conditions. Or do you mean you want to monitor the dNTP incorporation during a regular PCR program? If it is the latter case, why don't we use the qPCR method, which is designed to measure dsDNA concentration in each cycle of a PCR program?

RC: I mean whether the aforementioned rate measurement can be made in real time during a real-time PCR reaction. I would assume this is difficult. As you can see from the equations presented, it is not just a matter of total dNTP incorporation after each cycle.

What else remains to complete this version? I will let you know whether we will be retaining some of the simulation parts, after assessing the answers to the questions above.

If we do not include the simulations, we will probably move some of the SI MM derivations back into the paper, possibly in an Appendix, since no one generally reads Supporting Info.

CJ 12/11/13: It is mostly done. We just need to fix the simulation part. If we move the simulation back, we will need to adjust the abstract, background, discussion and conclusion parts accordingly. If we decide not to include the simulation part, all I need to do is to proofread the manuscript and fit it into the NAS template for submission.

RC: If we don't include the simulation we will need to move some of the MM derivations into the body. This will be the next step after the issues above are settled. After these changes the paper should be put in journal format (probably NAR), even if we choose to later put in simulation content. What are your thoughts on content vis-a-vis NAR standards if the simulation content is removed? I will also consider this.

CJ 11/25/2013

Attached here is the updated version of the Taq extension manuscript. I am also attaching a separate file to answer Raj's questions. I need to spend this afternoon and tomorrow morning finalizing the slides and preparing for the talk at group meeting. I will finish the rest of the revision after the group meeting.

Taq Paper CJ 11212013.doc

CJ comments 11252013.doc

RC: Some replies attached.

CJ comments 11252013_RC.doc

CJ 11/20/2013

Raj,

I read through your new MM model and have a question on Keq. Please see my comment in the attached file.

question.pdf

RC: Since the approach to calculation of Keq based on our own data would require us to use Sudha's data, I was not planning to apply that equation here (given the issues you raised with Sudha's data), but rather simply provide it to show that we have developed a methodology to do so. The contribution of our paper is a method for any polymerase as well as our data for Taq polymerase. (We made some arguments as to why we expect the effect of uncertainty in Keq provided by Datta - since it is not for Taq - to have a small effect on our calculated Kn, based on our chosen reaction conditions.)

RC (11-14):

Chaoran,

Attached below are my latest revisions to the extension paper draft.

I am summarizing here the next steps that you can work on. These are also listed as comments in the working draft:

1) Length: Some parts of introduction are too long. Compare current length to admissible journal paper length and reduce as appropriate. Note that I have added the MM derivations to the body of the paper, since it is useful to have a unified model on which our experiments as well as new experiments can be based.

The appendix contains supporting derivations that are not essential (it is not necessary to read all these at this time). They could be moved to supporting information/eliminated, or some can be summarized and incorporated into the body of the paper after we finish all other tasks.

2) We should redo the MM calculations using equation (6) for 1/v. The differences with respect to the original single reactants formulation originate in the expression for [E] in terms of [E]0 . The original formulation considered partitioning into two intermediates separately (not exact, since formation of the 2nd intermediate shifts the 1st equilibrium, to an extent that depends on the steady state concentration of the 2nd intermediate; here we of course assume the 2nd intermediate cannot dissociate directly to E + S1 + S2). The expression for 1/v changes as a result compared to the original single reactant formulation. The changes are:

a) A correction term 1/ (Keq,1*[SP]0) that was previously neglected

b) The value of [E]0 used - previously, I believe [E]0 (which was the [E.SP]0 in the bireactants formulation) was calculated using [E]0 = [E.SP]0=[E][SP]Keq.

The approximations previously made may have been valid esp due to high SP concentration– we will see. In any case, the current formulation is preferred since it does not make as many assumptions. Note that the standard sequential bireactants derivation makes a rapid equilibrium assumption that we cannot make in our work, since we do not want to equate Kn with an equilibrium constant.

Related, the current protocol does not clearly explain how the enzyme concentration was chosen. Where are Datta and LiCata cited?

3) I have made a comment regarding why one must be careful in setting up MM experiments to determine Keq for enzyme binding. You can decide whether to include this statement after considering it.

4) Related to 3), enzyme dissociation during extension may be related to polymerase processivity. CJ please look into processivity and comment.

5) In the results section, should there be any commentary on comparison to the fitting in the BP draft obtained from Innis et al data?

6) Overall editing of all sections except for simulation and robustness. Assume these sections and associated commentary in the discussion, conclusion, etc. will not be included in the paper. Please aim to finalize the paper including all formatting so it can be submitted without those sections if needed. This includes finalization of the conclusion.

7) Journal choice: Assume simulation content will not be included. Please check length and content and recommend a journal, including analysis of related papers in NAR.

The following comments/questions pertain to the experimental comparisons to the simulations that could be made. They are also provided as comments in the draft. Based on answers to these questions, I will decide whether it is worth finishing the unfinished simulation sections or leave them for another paper.

8) Can accurate fluorescence measurements be made under pseudo first order conditions (higher [N] excess)? If these conditions cannot be used, we must use numerical simulations to make the predictions.

9) A method for determining concentration of fully extended DNA at any time based on solution phase fluorescence measurements is proposed. It is based on a rate measurement. Are these measurements inaccurate without a sufficiently large number of measurements to obtain the slope? Would the required reaction conditions decrease signal to noise due to background fluorescence? Would the signal to noise during PCR cycles be too low to use this method to obtain the final DNA product concentration? If not, we may portray this as another application of our experimental work, since it could be used in PCR without model simulations.

10) Please comment on other methods for the experimental measurement of fully extended DNA, both offline (e.g. gels) and online (e.g. probes).

11) Please use the expression [E.S1.S2]=Keq,1/Kn [E][S1][S2] to compute the total concentration of the nucleotide intermediate, according to the eqns provided. This is an application of Kn (not just kcat/Kn) and will help us determine whether omission of the intermediate can be justified in the modeling.
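The expression in 11) can be evaluated with a short sketch like the one below. The numerical inputs are placeholders (not measured values), and the units are an assumption: Keq,1 is taken as an association constant in uM^-1 and Kn in uM, so the result is in uM. The function name `ternary_intermediate` is only illustrative.

```python
def ternary_intermediate(keq1_per_uM, kn_uM, e_uM, s1_uM, s2_uM):
    """[E.S1.S2] = (Keq,1 / Kn) * [E] * [S1] * [S2], all concentrations in uM.

    Assumed units: Keq,1 in uM^-1 (association constant), Kn in uM.
    """
    return (keq1_per_uM / kn_uM) * e_uM * s1_uM * s2_uM


# Placeholder inputs, for illustration only.
keq1 = 0.1       # uM^-1, enzyme-template association constant (hypothetical)
kn = 50.0        # uM (hypothetical)
e_free = 0.001   # uM, free enzyme [E] (hypothetical)
s1 = 0.2         # uM, template [S1]
s2 = 100.0       # uM, nucleotide [S2]

e_s1_s2 = ternary_intermediate(keq1, kn, e_free, s1, s2)
print(f"[E.S1.S2] = {e_s1_s2:.2e} uM")
print(f"ratio to template concentration = {e_s1_s2 / s1:.2e}")
```

Comparing the result to the template concentration gives a quick indication of whether the intermediate is a negligible fraction of the total, which is the question posed for the modeling.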

Thanks

Raj

Taq Paper CJ RC 11-14-13.doc

CJ 11/5/2013

Please see two sample papers attached, from Biochemistry (IF 3.4) and Analytical Biochemistry (IF 2.6), respectively.

analytical biochemistry sample.pdf

biochemistry sample.pdf

CJ 10/31/2013

Please see the attached file for the current version of the Taq extension paper, in track change mode. Compared to the last version I added two paragraphs in the Method section, and one paragraph and a figure on the temperature dependence analysis.

Taq Paper CJ 103113.doc

RC: Please provide some commentary below regarding suggested journals for this work in the event that we do not include substantial theoretical modeling (i.e., if the content is largely based on the current experimental analysis).

CJ: I suggest Analytical Biochemistry or Biochemistry.

RC: Please send the closest analogous paper you can find published in Biochemistry as an example along with impact factors for both the above journals.

CJ 10/30/2013

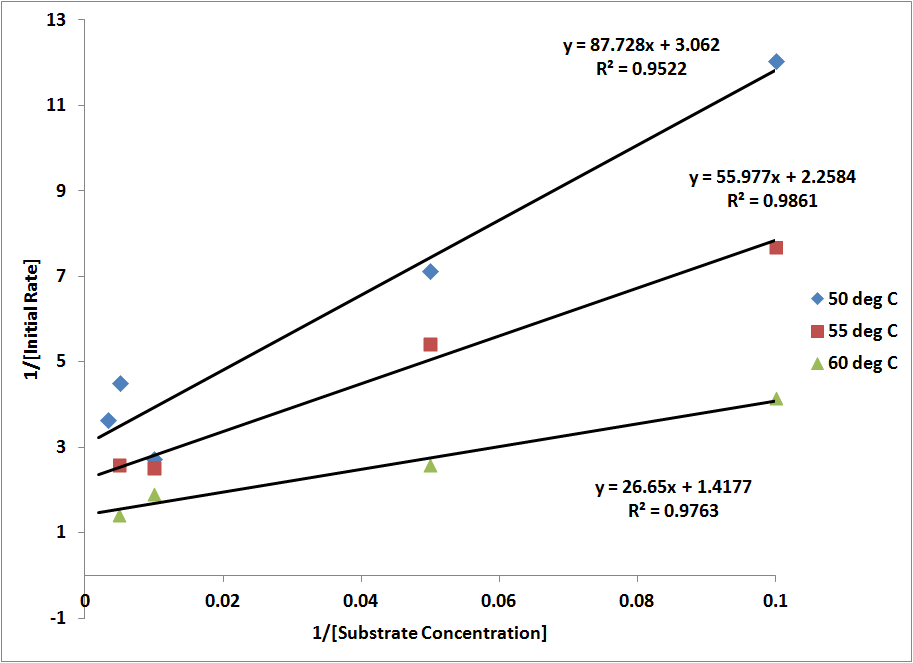

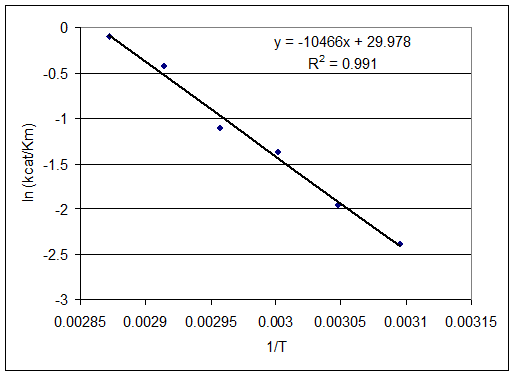

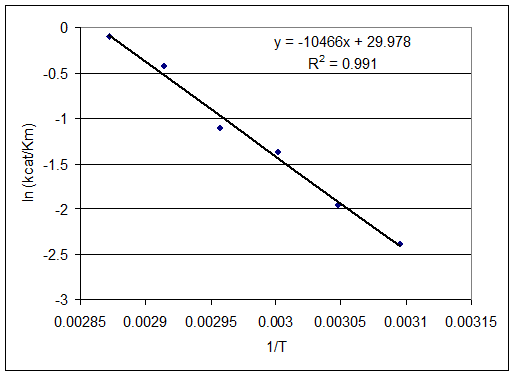

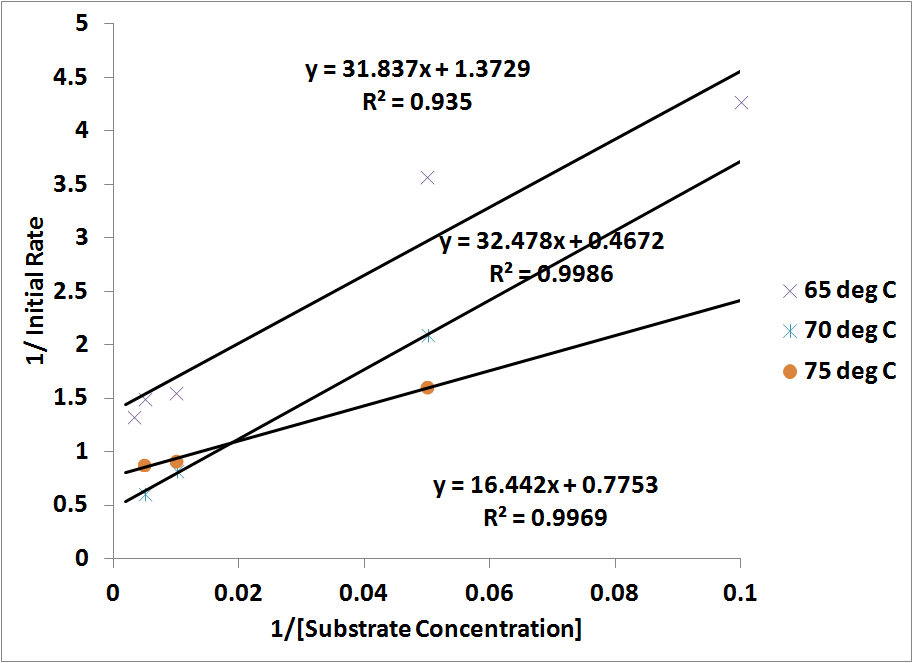

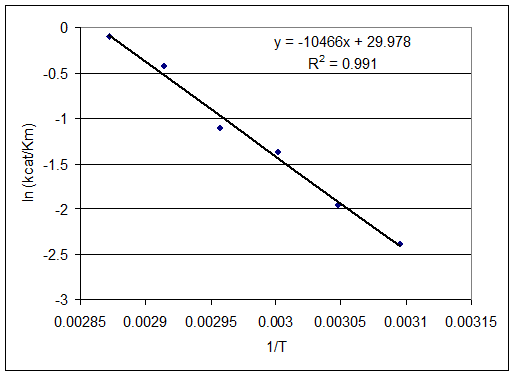

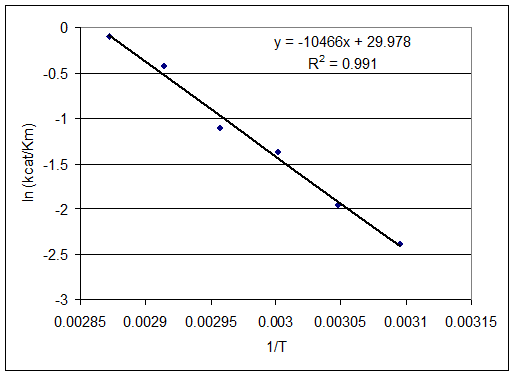

I checked Karthik's BP manuscript and found he plotted ln(kcat/Km) vs 1/T, instead of ln(kcat) vs 1/T. I tried the same thing and got the figure attached below:

ln(kcat/Km) vs 1/T shows a linear relationship. However, this is no longer an Arrhenius plot, and therefore the slope, as far as I understand, cannot be used to derive Ea. I'm having a hard time finding the biophysical significance of this plot. Any suggestions?

RC (10-30): Yes, that's what I mentioned in my last posting this morning (see below). As noted below kcat/Kn is a second order rate constant that treats the reaction as E + S -> E + P, i.e., neglecting the ES intermediate in the model. You are right that it may not be appropriate to equate Ea obtained from this plot with an activation energy associated with a particular transition state barrier. However, the Arrhenius model (with its assumptions of temperature independent parameters) is typically only an approximation to transition state theory anyway. Apparently some of the temperature-dependent deviation of kcat from the Arrhenius model is canceled by temperature dependent changes in Kn. We do not need to explicitly indicate a relation between the slope and activation energy. Nonetheless the fact that we can use a single Ea/k0 is good for modeling purposes. I will comment more after we have incorporated this into the draft.

CJ 10/29/2013

See my reply below.

a) What was the fate of Sudha's work on Km determination for the DNA? Did you eliminate this because of the protocol she used? If so, what were the issues? Was the reason that a lot of the original manuscript was deleted?

In a sequential binding model (which means the Taq binds to template first, followed by dNTP), the Km measured in Sudha's way does not solely reflect binding affinity with the first substrate. It is usually reported as Km(app). Please see the figure below for a derivation on how Km(app) is different from real Km.

CJ (10-30) The figures were messed up. So I'm attaching the Word doc for a discussion on Km.

Km in sequential binding model.doc

The reasons I deleted her figure are: (1) it's not easy to explain the biological relevance of Km(app); (2) Km for Taq and template has been reported using a 'direct' assay like fluorescence anisotropy (Datta and LiCata), and it is hard to explain why our method is better than theirs; and (3) the general protocol Sudha used for that set of data was modified later, in terms of enzyme concentration, Mg concentration, time points and temperature points etc. We would then need to either explain why we use different protocols for template and dNTPs, or redo the whole set of experiments using the same protocol as for dNTPs. Neither would be an easy task.

However, her Km values might still be worth reporting. I'm considering making it into the Supplementary Information.

RC (10-29): Yes, please consider it and update. In the meantime I am looking over your analysis above.

CJ (10-30): Please see the file attached.

Taq Paper SI CJ 103013.doc

RC (10-3): I think my comments from today were deleted. Yes, we considered this when we changed the MM protocol. Comments on this and how it should be presented will be forthcoming.

b) Where (what page) did you include the discussion about nucleotide inhibition?

After adjusting dNTP concentration, I did not see dNTP inhibition anymore. Therefore I find no need to discuss it. A discussion on dNTP concentration is in the last paragraph of the Discussion section.

RC (10-29): If I understand correctly, you adjusted dNTP concentration because of inhibition and based on the known mechanism of inhibition through Mg chelation. Do you feel that emphasizing this would call into question the results/protocol?

CJ (10-30): Sorry for the confusion. I should say 'after adjusting Mg concentration'.

c) The robustness analysis (if included) will be done using the method different from (and perhaps shorter than) that you have included in the draft. I will revise that section. At the same time, I will decide on the content of the simulation section. How much of this we choose to include will affect the choice of journal.

I see.

d) What are some example journals you are referring to when you indicate a 7000 word limit?

I used to publish in JACS, ACS Chemical Biology and Acc. Chem. Res. They all have a word limit of 6000 - 6500 for articles. I can check the specific requirements once we decide which journal it would go to.

RC (10-29): Can you compare to the length requirements of journals like Nucleic Acids Research, Biophysical Journal, and PLOS Computational Biology (XG has info on some of these)? The latter are unlikely choices, but may be appropriate if we choose to include more simulations. Some of those may allow for longer papers. Do you feel any of the journals you mentioned are appropriate for this work?

CJ (10-30): Nucleic Acids Research charges an excess page fee ($195 per page) for papers over 9 pages (corresponding to ~7500 words). Biophysical Journal has a page limit of 10 pages, corresponding to 8000~8500 words. I have not found page limit information for PLOS Computational Biology yet. If we decide to submit to this journal I can email their editorial board to ask.

e) You did not appear to consider an Arrhenius model for extension rates as a function of temperature (i.e., a figure that examines whether a constant preexponential factor and activation energy can accurately predict the temperature variation of extension rates). Is this something you plan to add?

I asked this question in the previous round of revision, but got no answer. Therefore I thought we were not interested in it. However, I can try to add it. One thing we need to keep in mind is that an enzymatic reaction may not fit the Arrhenius model well. In the classic Arrhenius model, the reaction rate simply increases with temperature. But for enzymes, the reaction rate will peak at an optimal temperature. If we do report the Arrhenius analysis, we will need to explain why it is significant.

RC (10-29): Even if we can apply a different Arrhenius model to say, two different temperature ranges, it is ok and useful for simulation. You can try to fit the model separately over 2-3 temperature ranges. In some cases we have found a good fit with multiple Arrhenius models when the reaction rate peaks at a particular temperature.

Significance/motivation: For computational modeling and optimization of PCR, it is necessary to have a model for the temperature dependence of all reaction rate constants. Knowing the rate constants at a discrete set of temperatures (such as those considered in the lab) is not sufficient since the optimization algorithm may vary the temperature and reaction time continuously. For example, since extension occurs even during the annealing step of PCR, we may need to know the extension rate constant at all possible annealing temperatures so that the optimal annealing temperature and time can be set. Arrhenius models are approximations, but they are convenient because of their use of just two parameters (preexponential factor, activation energy). We could fit various other nonlinear functions, but we should first examine the results with the simplest Arrhenius model. The goal is to show that such models for temperature variation of the rate constants are suitably accurate for the purpose of modeling and optimization.

You can talk to Ping about this, just for background on what we did previously with fitting of models for the temperature variation of rate constants. He is familiar with the work I mentioned and has the drafts (Biophysical J paper). This would help you outline this section. In return, I also asked Ping to talk to you about Datta and LiCata (enzyme binding rate constants). In the BP paper draft we may have a figure for variation of the second order extension rate constant kcat/Kn with temperature (an approximate model based on several assumptions and literature data, since we did not have the necessary experimental data at that time).

CJ (10-30): Please see the attached figure. Based on the Arrhenius equation, if we plot lnk vs 1/T, the slope of the curve is -Ea/R. As you can see in the figure, lnk vs 1/T does not show a linear relationship. I'm not quite comfortable using the multiple Arrhenius model because we only have 6 temperature points, barely enough to fit one model. If we want to estimate the reaction rate at other temperatures, I suppose the best way is to fit all 6 data points to a nonlinear model, rather than 2 or more linear models. Another way is to do interpolation and extrapolation rather than curve fitting. What would you suggest?
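For reference, the single-model Arrhenius fit discussed above (ln k vs 1/T, slope = -Ea/R) can be sketched as follows. The six temperatures and the rate constants below are synthetic placeholders generated from an assumed Ea and k0, not our data; the function name `arrhenius_fit` is illustrative. The residuals returned by the fit give a simple way to see whether a single straight line is adequate.

```python
import numpy as np

R = 8.314  # gas constant, J mol^-1 K^-1


def arrhenius_fit(temps_c, k):
    """Least-squares fit of ln(k) = ln(k0) - Ea / (R * T).

    Returns (Ea in J/mol, k0, residuals of ln k).
    """
    inv_t = 1.0 / (np.asarray(temps_c, dtype=float) + 273.15)
    ln_k = np.log(np.asarray(k, dtype=float))
    slope, intercept = np.polyfit(inv_t, ln_k, 1)
    residuals = ln_k - (slope * inv_t + intercept)
    return -slope * R, np.exp(intercept), residuals


# Synthetic example: six hypothetical temperatures and rate constants
# generated from an assumed Ea and k0 (placeholders, not measured values).
temps_c = np.array([45.0, 50.0, 55.0, 60.0, 65.0, 72.0])
ea_true, k0_true = 60e3, 1e9
k_synth = k0_true * np.exp(-ea_true / (R * (temps_c + 273.15)))

ea_fit, k0_fit, resid = arrhenius_fit(temps_c, k_synth)
print(f"fitted Ea = {ea_fit / 1000:.1f} kJ/mol")
print(f"largest |residual| in ln k = {np.max(np.abs(resid)):.2e}")
```

With real data, a systematic pattern in the residuals (for example, positive at both ends and negative in the middle) would indicate curvature that a single Arrhenius model cannot capture.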

RC (10-30): Such a figure should certainly be included in the paper. I should have been more specific; what we need for the purpose of modeling is the analogous plot for the second order rate constant kcat/Kn (ratio), since we do not model the intermediate. As mentioned above, we can fit other nonlinear functions (or use interpolation schemes) now that we have seen the data do not fit well to a linear model, but please discuss with PL first and get the plot we currently have in the BP paper (if it's there) and then we will decide on the approach (which approach chosen has implications for the modeling). In some cases we used two linear models if there was a good fit to the linear models with constant Ea,k0 in separate temperature ranges (e.g. when there was a temperature of maximal activity; here, we see maximal activity at the highest temperature you used, so that is not relevant). I agree regarding the current number of data points being limited in this case (have you sampled all temperatures you intend to)? (If we want more, we should discuss - it may be possible to get the data we need regarding kcat/Kn more rapidly.)

-- Robustness experiments. I've read your comments below. Here are some further details on how the experiments used for model validation (as opposed to MM parameter fitting) may differ. This list is in order of priority. Bear in mind that we may not include the results of all such experiments for this paper (we may put them in a second paper instead).

a) We need to quantify the standard error of measurements of total DNA concentration for a known total concentration of DNA. This is because the difference between the model predicted total DNA concentration, given an estimated value of kcat/Kn, and the measured total DNA concentration is attributable to both the error in the model prediction (due to e.g. error in the kcat/Kn estimate) and the measurement error, and we are interested in the former.

I see. I will try to do some error analysis based on our current data set.

RC (10-29): Ideally this would be done with a known concentration of total incorporated nucleotide or DNA.

CJ (10-30): I'm a little bit confused by your terminology: you said 'We need to quantify the standard error of measurements of total DNA concentration for a known total concentration of DNA'. What is the difference between 'total DNA concentration' and 'total concentration of DNA'? My understanding of 'concentration of total incorporated nucleotide or DNA' is that this is what we measured on the fluorometer, am I right?

RC (10-30): Same thing - just making sure the concentration is known so we isolate measurement error. To be precise, here and elsewhere I am referring to the fluorescence measurement of total ds nucleotide concentration.

b) Regarding the protocol suggested below, it is good to see that the conditions are similar to those in PCR. Please indicate how your lowest [dNTP] compare to those used in the later cycles of PCR (where nucleotide gets depleted). What are some characteristic values for the latter? The model can be used to predict incorporated [dNTP] under non pseudo-first order conditions as well, but we should first verify whether those are relevant.

Our lowest [dNTP] is 2uM. At this low concentration we can barely detect positive reactions (the value of the initial rate is usually smaller than the standard error). In the late stage of PCR when dNTP is depleted, the dNTP concentration might be even lower, and the reaction rate is close to zero. As a result, I don't think it is very useful to study the kinetics under dNTP depletion. People are more interested in the initial and log phases of the reaction.

RC (10-29): It would still be useful to provide info on the characteristic [dNTP] at different cycles/stage of PCR since we ultimately will be modeling the PCR reaction (and providing prescriptions for the optimal temperature protocol) during every cycle (at least in other work). (It is interesting that you observe the reaction rate fall close to zero when [dNTP] is not in significant excess, and that you do not observe a regime where the reaction is second order (dNTP, template).)

CJ (10-30): If we want to know the dNTP concentration during each cycle, what we need to do is to run a series of PCR reactions, stopped at various cycles; measure the amount of incorporated dNTP and then calculate how much dNTP is left in the buffer.

Did you find that the first-order kinetic model fit the data equally well at your lowest vs highest [dNTP], indicating that a pseudo-first order approximation was appropriate at all [dNTP] concentrations?

At low [dNTP] and/or low temperature the first-order kinetics degenerates into zero-order: RFU vs. time shows a linear relationship. This makes sense because under these unfavorable conditions the reaction rate is so low that we cannot sample all the way to the plateau stage within 10min.
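The observation above can be illustrated with a minimal sketch, assuming the signal follows RFU(t) = A(1 - exp(-kt)). When k*t stays small over the whole sampling window, this curve is nearly indistinguishable from the straight line A*k*t. The rate constants below are hypothetical; the 10 min window and 12 time points are taken from the protocol described in this thread.

```python
import numpy as np


def max_linear_deviation(k_per_s, t_max_s=600.0, n_points=12):
    """Largest relative gap between A*(1 - exp(-k t)) and its linear
    approximation A*k*t over the sampling window (A cancels out)."""
    t = np.linspace(t_max_s / n_points, t_max_s, n_points)
    first_order = 1.0 - np.exp(-k_per_s * t)
    linear = k_per_s * t
    return float(np.max((linear - first_order) / linear))


# Hypothetical rate constants: a slow reaction (low [dNTP] or low
# temperature) and a fast one that reaches the plateau within 10 min.
slow_dev = max_linear_deviation(1e-4)
fast_dev = max_linear_deviation(1e-2)
print(f"slow reaction: max deviation from a straight line = {slow_dev:.1%}")
print(f"fast reaction: max deviation from a straight line = {fast_dev:.1%}")
```

For the slow case the deviation is only a few percent, likely within measurement noise, so the data look zero-order even though the underlying model is first-order.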

While we may not change the protocol given that it suits PCR, we may want to take more measurements of total DNA concentration at particular times to get a better estimate of the experimental uncertainty when comparing to model predictions.

I see. One thing is that the current protocol is quite time- and reagent-consuming, as well as labor-intensive, making it unrealistic to sample many time points. I'm thinking of using a real-time PCR protocol to do this. That means we add the dye into the reaction and monitor the fluorescence over time. As discussed in the paper, this will introduce some complexity, e.g., the dye may inhibit the enzyme. However, this is still worth studying because this is exactly what happens in qPCR applications.

This is just an initial idea. If we insist on sticking with the current end-point detection protocol, I'm ok with it. It just means we will need to spend a lot of time and reagents to get these data.

RC (10-29): We wouldn't need to repeat most of the previous experiments with more measurements at each time point. This could be done for only a few time points for one or two reaction conditions, since we would be making computational predictions at particular times.

CJ (10-30): My only concern is the day-to-day variation. Sudha observed that the exact same reaction, run in 2012 and 2013, showed very different RFU values. This variation was not a big problem in our previous set of experiments because we ultimately use dRFU/dt rather than the absolute values of RFU. But for the experiments you suggested above, I think we need to make sure the results from our old data set are compatible with those from a new set of experiments.

c) Though the current measurements can give us the total concentration of incorporated nucleotides, in order to determine the total concentration of fully extended DNA at a particular time (which the theoretical model can predict and which is of greatest interest in PCR), we may need to run a gel and extract the fully extended product. Please provide comments on how difficult this would be and the associated measurement error of the fully extended DNA concentration (esp how it compares to the fluorescence measurement error of the total incorporated dNTP concentration).

It is doable. But since detection of DNA on a gel is also based on fluorescence, I don't see any reason why a gel-based assay would be significantly more accurate than a solution-based assay. The detector for the solution-based assay is a fluorescence spectrometer, while for the gel-based assay it is a camera. My intuition is that the former would be more sensitive and accurate. The major advantage of a gel-based assay, in my opinion, would be the resolution of products of different lengths, if we are interested in that. By the way, a gel-based detection assay will be more expensive, time-consuming, and labor-intensive compared to a solution-based assay.

RC (10-29): Yes I agree - I meant that I assume the accuracy of the gel-based measurement will be lower than that of solution phase measurement, and that is why I am curious whether it will be accurate enough for our purposes. It would certainly be much more time consuming. Yes, resolution of product length (esp fully extended) is the goal.

CJ (10-30): This is something we need to try by experiments. If the gel does not work, we may consider EC, which is claimed to be more sensitive and accurate.

d) Ideally, because the rate of nucleotide addition for the last dNTP added is different than that of all other dNTPs, it is good to use a long template, especially if we are making predictions of total fluorescence at later times. However, since we did not consider this to be an issue for MM kinetics, we will ignore it here as well and it is not a priority to work with a new template for robustness analysis.

I agree. A long ssDNA may be more prone to forming secondary structure. Also, chemical synthesis of oligos >90nt is not quite practical. Some companies claim they can synthesize oligos of ~100nt, but I would be very cautious about the quality of their products.

RC 10/28/2013

Thanks for the revised draft, I've looked over it and have some comments on the revisions as well as the next step for experiments (should we choose to proceed with them at this time):

-- Manuscript. Please comment on the following:

a) What was the fate of Sudha's work on Km determination for the DNA? Did you eliminate this because of the protocol she used? If so, what were the issues? Was the reason that a lot of the original manuscript was deleted?

b) Where (what page) did you include the discussion about nucleotide inhibition?

c) The robustness analysis (if included) will be done using the method different from (and perhaps shorter than) that you have included in the draft. I will revise that section. At the same time, I will decide on the content of the simulation section. How much of this we choose to include will affect the choice of journal.

d) What are some example journals you are referring to when you indicate a 7000 word limit?

e) You did not appear to consider an Arrhenius model for extension rates as a function of temperature (i.e., a figure that examines whether a constant preexponential factor and activation energy can accurately predict the temperature variation of extension rates). Is this something you plan to add?

-- Robustness experiments. I've read your comments below. Here are some further details on how the experiments used for model validation (as opposed to MM parameter fitting) may differ. This list is in order of priority. Bear in mind that we may not include the results of all such experiments for this paper (we may put them in a second paper instead).

a) We need to quantify the standard error of measurements of total DNA concentration for a known total concentration of DNA. This is because the difference between the model predicted total DNA concentration, given an estimated value of kcat/Kn, and the measured total DNA concentration is attributable to both the error in the model prediction (due to e.g. error in the kcat/Kn estimate) and the measurement error, and we are interested in the former.

b) Regarding the protocol suggested below, it is good to see that the conditions are similar to those in PCR. Please indicate how your lowest [dNTP] compares to those used in the later cycles of PCR (where nucleotide gets depleted). What are some characteristic values for the latter? The model can be used to predict incorporated [dNTP] under non pseudo-first order conditions as well, but we should first verify whether those are relevant.

Did you find that the first-order kinetic model fit the data equally well at your lowest vs highest [dNTP], indicating that a pseudo-first order approximation was appropriate at all [dNTP] concentrations?

While we may not change the protocol given that it suits PCR, we may want to take more measurements of total DNA concentration at particular times to get a better estimate of the experimental uncertainty when comparing to model predictions.

c) Though the current measurements can give us the total concentration of incorporated nucleotides, in order to determine the total concentration of fully extended DNA at a particular time (which the theoretical model can predict and which is of greatest interest in PCR), we may need to run a gel and extract the fully extended product. Please provide comments on how difficult this would be and the associated measurement error of the fully extended DNA concentration (esp how it compares to the fluorescence measurement error of the total incorporated dNTP concentration).

d) Ideally, because the rate of nucleotide addition for the last dNTP added is different than that of all other dNTPs, it is good to use a long template, especially if we are making predictions of total fluorescence at later times. However, since we did not consider this to be an issue for MM kinetics, we will ignore it here as well and it is not a priority to work with a new template for robustness analysis.
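A minimal sketch of the error separation described in (a), assuming that the measurement error and the model prediction error are independent, so that their variances add. All numbers are placeholders (replicate readings of a nominal 100 nM standard and a handful of model-minus-measurement discrepancies), not experimental data, and the function names are hypothetical.

```python
import math
import statistics

def measurement_error(replicates):
    """Return (sd of a single reading, standard error of the mean)."""
    sd = statistics.stdev(replicates)
    return sd, sd / math.sqrt(len(replicates))

def model_error_sd(discrepancies, single_reading_sd):
    """Estimate the model contribution to the discrepancy.

    Assumes independent errors: var_total = var_model + var_measurement,
    where var_total is the mean squared (prediction - measurement).
    """
    var_total = statistics.fmean([d * d for d in discrepancies])
    var_model = max(var_total - single_reading_sd ** 2, 0.0)  # clip at zero
    return math.sqrt(var_model)

# Placeholder replicate readings of a known total DNA concentration (nM).
replicates = [98.0, 102.0, 100.0, 101.0, 99.0]
measurement_sd, measurement_se = measurement_error(replicates)

# Placeholder discrepancies: model prediction minus single measurement (nM).
discrepancies = [3.0, -2.0, 4.0, -3.0, 2.0]
model_sd = model_error_sd(discrepancies, measurement_sd)

print(f"measurement sd = {measurement_sd:.3f} nM, se = {measurement_se:.3f} nM")
print(f"estimated model error sd = {model_sd:.3f} nM")
```

The mean squared discrepancy also absorbs any systematic bias in the model, so this is only a rough partition; more replicates at each time point would tighten the measurement term.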

CJ 10/25/2013

Attached please find the extension kinetics manuscript, revised based on our new plan and new data. Notably, most journals have a word limit of <7000 words for articles. The previous version of the manuscript has >9000 words, and we need to add a lot of work on simulation. Therefore I trimmed the background and discussion sections, making them more compact and concise. I have left comments in the file to indicate where to fill in the theoretical work. I am also attaching a track change version in case you want to know what changes I made to the old manuscript.

Taq Paper CJ 102513.doc

Taq Paper CJ 102513 track change.doc

Raj, regarding your comment, I would like some clarification on what we want to do differently in the next step. You want to measure [total DNA (and partially extended primer-template) concentration as a function of time starting with the addition of nucleotide to pre-annealed and enzyme-bound primer-template (see below), under conditions of significant nucleotide excess as is common in the initial cycles of PCR (in PCR extension the enzyme and primer are also in excess in the initial cycles)]. Actually, this is exactly what we have been doing: we measure dsDNA extension as a function of time; we use pre-annealed and enzyme-bound primer-template; and we use excess dNTPs (e.g. 200 - 1000 uM) for some groups of experiments. You also mentioned [The protocol would differ from the MM kinetics protocol in that we are no longer interested in just the initial rate.] As a matter of fact, in many groups (high dNTP, high temperature) of our previous experiments, the reaction reached a plateau and was not just in the initial stage (see a representative figure below). I also want to mention that the conditions in our previous experiments, in terms of template and enzyme concentration, Mg concentration, dNTP concentration, etc., are quite comparable to those in real-world PCR applications. The only significant difference is the extension time: usually it is 1 min for 1 kb, but we use 10 min for 80 bp. Overall, I am not quite clear on what kind of protocol you want me to design for the next step. Could you clarify the purpose of this project and how it differs from the previous one?

The figure below is a representative reaction curve that proceeded well beyond the initial stage, measured at 65 C, 1000 uM dNTP.

RC (10-23): Thanks. After the completion of MM extension experiments and experimental manuscript section, the knowledge transfer on the b-lactamase project, and the literature review, etc on the diagnostics projects, you should consider preparation of a protocol for measurement of total DNA (and partially extended primer-template) concentration as a function of time starting with the addition of nucleotide to pre-annealed and enzyme-bound primer-template (see below), under conditions of significant nucleotide excess as is common in the initial cycles of PCR (in PCR extension the enzyme and primer are also in excess in the initial cycles). The protocol would differ from the MM kinetics protocol in that we are no longer interested in just the initial rate. However, as in the MM protocol, we do not want to consider the time course of primer annealing and enzyme binding to primer-template hybrid. We would like to record the total fluorescence and its standard error at regular sampling intervals. These results would probably not go in the current manuscript, but we may write a follow up manuscript that uses them.

CJ 10/21/2013

Attached please see a summary of the extension experiments I've been running so far. These data will be integrated into the extension kinetics manuscript, which I'm currently working on.

102113 CJ report.ppt

RC (10-2): Thanks for the recent updates. As mentioned below, we can keep revising the experimental parts of the extension paper draft in the meantime.

I have worked on the theory parts of the extension paper including the robustness analysis. I will discuss with you after the above are further along.

We will also have to come to a judgment regarding whether we want to run any extension reactions under PCR conditions in order to check the predictions/robustness of the model based on the MM parameters. This would require a new type of experiment that does not just measure initial rates but monitors fluorescence during the whole extension reaction - which we may not want to include in this paper. We should come to a conclusion on this because it affects how we will present the modeling and robustness analysis parts of the paper (they may be made shorter if we want to address the later issues in another paper). This will be easier to do after seeing the layout of the rest of the paper.

CJ 9/20/13

Attached please find a report on the experiments I ran this week (60C).

092013 CJ report.ppt

CJ 9/13/13

Attached please find a report on the experiments I ran this week (50C).

091313 CJ report.ppt

CJ 9/6/2013

Attached please find a report on the experiments I ran this week (55C).

090613 CJ report.ppt

CJ 9/5/2013

Attached please find an initial framework of the Taq extension kinetics paper, along with my comments. Please let me know how it should be revised. After figuring out the general structure of the paper I will start with the write-up.

Currently I'm also running the experiments for this paper, which would take ~4 weeks. Hopefully the simulation work and robustness analysis could be done during this time period.

Taq Paper CJ 090413.doc

RC: I will comment shortly. No further theoretical write up, simulation work or robustness analysis should proceed until then. Experimental parts can continue to be revised.

CJ 9/4/2013

Meeting with Karthik:

Plan for the extension paper:

(1) CJ will write a framework of the paper by the end of this week based on Sudha's previous manuscript.

(2) Karthik will post some references for the robustness analysis. CJ will read them first, then discuss with Karthik if there are any questions.

RC: RC will provide needed references since there is work underway in the group on this.

(3) CJ and Karthik will then fill out the paper with expt and simulation results.

CJ 9/3/2013

Thanks Raj! I will work on the write-up as soon as I get an electronic copy of Sudha's manuscript. I went through all the files ever uploaded to the Wiki, but did not see that one. Karthik, would you kindly help me find that file and send it to me?

Raj sent me a version in October and I don't know whether Sudha has updated that draft since then. Here is the draft that I have.

Thanks Karthik! This is exactly what I'm looking for. - CJ

Taq Paper Draft April 10-1.doc

RC 9/3/2013

KM, I have looked over the extension robustness outline. Thanks. Once we have settled the simulation plans, I will revise this as necessary, and also will revise/extend the BP paper robustness analysis, which was also based in part on Nagy's papers. As you know, in our group Andy has worked extensively on robustness analysis, including simulations using these approaches, and you should communicate with him regarding his experiences. The theory outlined below has been discussed and implemented in Andy's control work (see e.g. our slides on robustness analysis of qc and his current working papers). The Taylor approximation for the computational robustness analysis is useful primarily in an optimization context due to its speed. However, it is often inaccurate; Andy can give you details. We have developed methods in our group that are significantly more accurate for time-varying linear systems (stage 1 of PCR). I will comment on this, but before I can do this we need to settle some details of the scope of what we will be presenting in this paper. The presentation should be brief.

What I would like you to work on now is the detailed plan for how the simulations will be compared to the experiments:

- For this paper, there is no reason not to obtain the state variable uncertainties through simulation, since it will be more accurate. We should start setting up the code for this. It may be useful to talk to AK about it.

KM: Yes, we should obtain the state variable uncertainties through simulation, and this was actually the original plan. It can be done in several ways:

1) By sampling the worst case parameters and solving the state equations.

2) Robustness analysis will give the distribution for the evolution of the state variables, and it should describe the uncertainty in the state variables.

Do you mean 1) when you say that we should obtain the state variable uncertainties through simulation?

- CJ will be providing experimental uncertainties for the kcat/Kn parameter. Per our previous discussions, I believe this was to be the only uncertain parameter in our extension model, since we will be starting with fully bound enzyme. CJ and KM, please confirm. We should start by sampling from the normal distribution of this parameter value and computing the moments of the state variable.

Yes, we need only kcat/Kn parameter.

- We also need to decide on what will be the state variable of interest. I believe we agree that this should be the sum of concentrations of all E.Di's, since this is what can be measured experimentally. CJ and KM please confirm.

Yes,

- We need to decide on what experimental conditions will be used for this analysis. Are we planning to use current assay conditions, or PCR conditions? Are the conditions similar? How do they differ?

Ideally a rate constant is only a function of temperature. To start with, we can use the current assay conditions, but I think it would be appropriate to use the PCR conditions for the comparison with the theoretical results. CJ can comment on the conditions.

- Are the assay conditions suitable for application of pseudo first-order kinetics (i.e., stage 1 of PCR)?

As stated above, as long as we have a rate constant that is a function of temperature, it should be sufficient and we should not worry about assay conditions.

- For the theory part, are we planning to consider variable extension temperature or only constant extension temperature? The AK/RC method is most useful for variable extension temperature with pseudo first-order kinetics. It is more important to mention it in that case.

In the current plan, I have included the variable temperature. This is important, especially for the simultaneous annealing and extension.
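A minimal sketch of the sampling approach discussed above: draw kcat/Kn from a normal distribution, propagate each draw through a simple extension model, and compute the moments of the output. Everything specific here is an assumption for illustration, not the group's state equations: the enzyme is fully bound, every position extends with the same pseudo-first-order rate k_eff = (kcat/Kn) [dNTP] at constant temperature, the observable is the mean number of nucleotides incorporated per template, and all numerical values (kcat/Kn mean and sd, number of sites) are placeholders. Under these assumptions the number of additions by time t is a Poisson count with mean k_eff t, capped at the number of template sites.

```python
import math
import random
import statistics

def mean_incorporated(k_eff, t, n_sites):
    """Mean nucleotides added per template by time t for a chain of
    n_sites sequential steps, each with rate k_eff (1/s).

    Equals sum over i = 1..n_sites of P(N >= i), with N ~ Poisson(k_eff * t).
    """
    lam = k_eff * t
    pmf = math.exp(-lam)      # P(N = 0)
    cdf = 0.0
    total = 0.0
    for i in range(1, n_sites + 1):
        cdf += pmf            # now cdf = P(N <= i - 1)
        total += 1.0 - cdf    # add P(N >= i)
        pmf *= lam / i        # now pmf = P(N = i)
    return total

def propagate(k_mean, k_sd, dntp, t, n_sites, n_samples=2000, seed=0):
    """Monte Carlo mean and sd of the observable under kcat/Kn ~ Normal."""
    rng = random.Random(seed)
    outputs = []
    while len(outputs) < n_samples:
        k = rng.gauss(k_mean, k_sd)
        if k <= 0.0:          # discard unphysical draws
            continue
        outputs.append(mean_incorporated(k * dntp, t, n_sites))
    return statistics.fmean(outputs), statistics.stdev(outputs)

# Placeholder inputs: kcat/Kn in 1/(uM s), [dNTP] in uM, time in s.
mc_mean, mc_sd = propagate(k_mean=0.01, k_sd=0.001, dntp=200.0,
                           t=10.0, n_sites=60)
print(f"mean incorporated = {mc_mean:.2f}, sd = {mc_sd:.2f}")
```

The same loop could wrap a numerical solution of the full state equations, or a variable-temperature rate constant, in place of `mean_incorporated`; the sampling and moment calculation would not change.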

CJ, please start by putting together all the materials we have so far regarding the experimental estimation of the MM (kcat/Kn) parameter, and whether/how we will be using Sudha's earlier work on Km estimation.

This should be connected with the materials KM and I put together on the MM theory.

Then later you can add the theory on Mg chelation.

KM, please set a time to discuss these plans w CJ.

I will meet CJ on Thursday. Will send an email to CJ regarding this.

CJ 9/3/2013

Thanks Karthik.

"by fixing E.SP complex and Nucleotide (dNTP) concentration (Need to make sure there is no SP) conduct an extension reaction at a fixed reaction temperature (may be 72 deg C). During the course of the reaction, measure the concentration of DNA (completely extended DNA or whatever the variable that can be measured with respect to time)."

This is exactly what I'm doing right now. By running such measurements I get a reaction curve like this: